In this paper, Bostrom describes his **"Simulation Argument"**. Thi...

Nick Bostrom is a Swedish philosopher at the University of Oxford. ...

Here is an interview with with Nick Bostrom at the Future of Humani...

This subject gained attention recently due to an interview with Elo...

There are mentions to simulated worlds in pop culture that date as ...

*Thinking is a function of man's immortal soul [they say.] God has ...

**Substrate-Independence:** Substrate-Independence is a term used t...

There is a lot of debate about whether we will be able to simulate ...

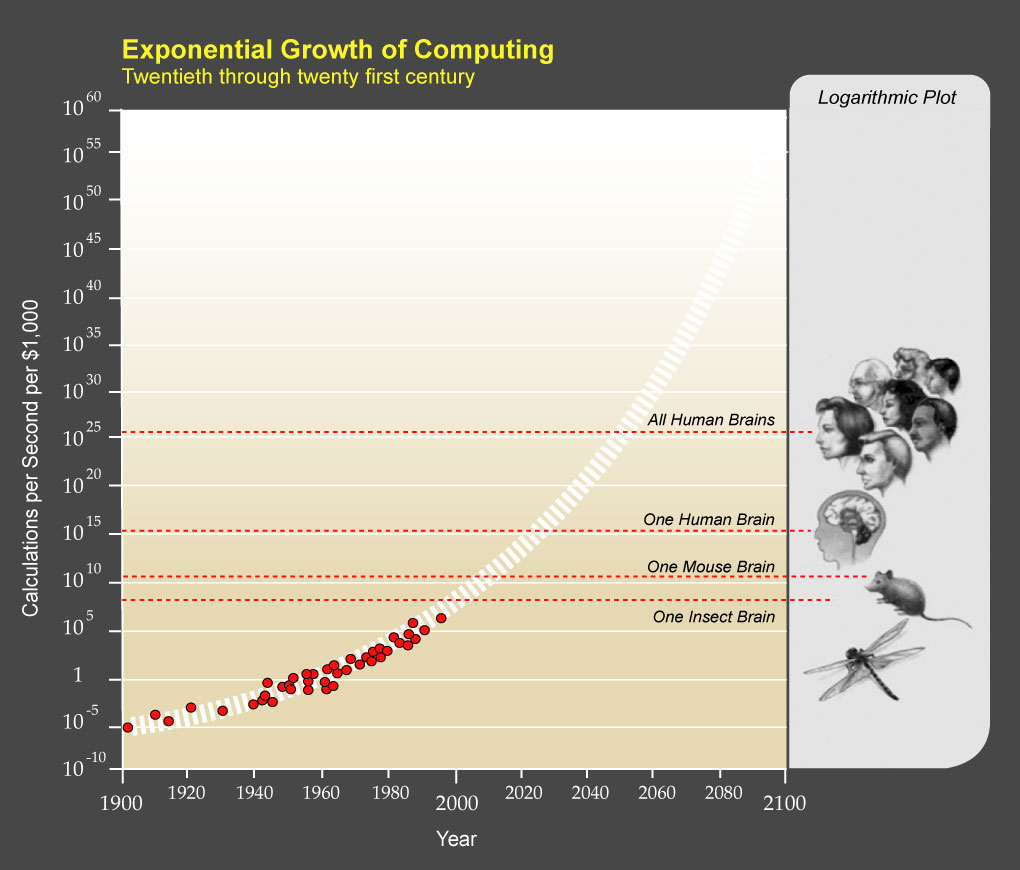

Ray Kurzweil predicts that by 2029 a personal computer will be 1,00...

The simulation argument is time independent. It does not matter tha...

The author invokes new physical phenomena yet to be discovered and ...

There are $10^{11}$ neurons in a human brain operate by sending el...

In the late 1980s Thomas K. Landauer performed a study in order to ...

We can easily compute this number of operations.

Let's assume th...

As you go deeper into the simulation and you consider multi-level s...

The author develops a probabilistic consideration of the argument o...

**(1)** $f_p \approx 0 \Rightarrow $ *"The fraction of human-level...

**The Brain in a Vat** thought-experiment is most commonly used to ...

Couldn't you consider the other limiting case with decreasing fract...

We could go extinct trying to reach posthuman stage. Here is a vide...

Running ancestor simulations is either banned by future civilizatio...

Humans experience the world through their senses. What we call "rea...

If we believe that we are in a simulation and that our simulators a...

This is an interesting argument against the multi-level hypothesis....

The author makes a good analogy with religion. **The creators of an...

**The simulation hypothesis cannot be proven or disproven**. It als...


ARE YOU LIVING IN A COMPUTER SIMULATION?

BY NICK BOSTROM

[Published in Philosophical Quarterly (2003) Vol. 53, No. 211, pp. 243-255. (First version: 2001)]

This paper argues that at least one of the following propositions is true: (1) the human species is very likely to go extinct before reaching a “posthuman” stage; (2) any posthuman civilization is extremely unlikely to run a significant number of simulations of their evolutionary history (or variations thereof); (3) we are almost certainly living in a computer simulation. It follows that the belief that there is a significant chance that we will one day become posthumans who run ancestor-simulations is false, unless we are currently living in a simulation. A number of other consequences of this result are also discussed.

I. INTRODUCTION

Many works of science fiction as well as some forecasts by serious technologists and futurologists predict that enormous amounts of computing power will be available in the future. Let us suppose for a moment that these predictions are correct. One thing that later generations might do with their super-powerful computers is run detailed simulations of their forebears or of people like their forebears. Because their computers would be so powerful, they could run a great many such simulations. Suppose that these simulated people are conscious (as they would be if the simulations were sufficiently fine-grained and if a certain quite widely accepted position in the philosophy of mind is correct). Then it could be the case that the vast majority of minds like ours do not belong to the original race but rather to people simulated by the advanced descendants of an original race. It is then possible to argue that, if this were the case, we would be rational to think that we are likely among the simulated minds rather than among the original biological ones. Therefore, if we don’t think that we are currently living in a computer simulation, we are not entitled to believe that we will have descendants who will run lots of such simulations of their forebears. That is the basic idea. The rest of this paper will spell it out more carefully.


Apart from the interest this thesis may hold for those who are engaged in futuristic speculation, there are also more purely theoretical rewards. The argument provides a stimulus for formulating some methodological and metaphysical questions, and it suggests naturalistic analogies to certain traditional religious conceptions, which some may find amusing or thought-provoking.

The structure of the paper is as follows. First, we formulate an assumption that we need to import from the philosophy of mind in order to get the argument started. Second, we consider some empirical reasons for thinking that running vastly many simulations of human minds would be within the capability of a future civilization that has developed many of those technologies that can already be shown to be compatible with known physical laws and engineering constraints. This part is not philosophically necessary but it provides an incentive for paying attention to the rest. Then follows the core of the argument, which makes use of some simple probability theory, and a section providing support for a weak indifference principle that the argument employs. Lastly, we discuss some interpretations of the disjunction, mentioned in the abstract, that forms the conclusion of the simulation argument.

II. THE ASSUMPTION OF SUBSTRATE-INDEPENDENCE

A common assumption in the philosophy of mind is that of substrate-independence. The idea is that mental states can supervene on any of a broad class of physical substrates. Provided a system implements the right sort of computational structures and processes, it can be associated with conscious experiences. It is not an essential property of consciousness that it is implemented on carbon-based biological neural networks inside a cranium: silicon-based processors inside a computer could in principle do the trick as well. Arguments for this thesis have been given in the literature, and although it is not entirely uncontroversial, we shall here take it as a given.

The argument we shall present does not, however, depend on any very strong version of functionalism or computationalism. For example, we need not assume that the thesis of substrate-independence is necessarily true (either analytically or metaphysically) – just that, in fact, a computer running a suitable program would be conscious. Moreover, we need not assume that in order to create a mind on a computer it would be sufficient to program it in such a way that it behaves like a human in all situations, including passing the Turing test etc. We need only the weaker assumption that it would suffice for the generation of subjective experiences that the computational processes of a human brain are structurally replicated in suitably fine-grained detail, such as on the level of individual synapses. This attenuated version of substrate-independence is quite widely accepted.

Neurotransmitters, nerve growth factors, and other chemicals that are smaller than a synapse clearly play a role in human cognition and learning. The substrate-independence thesis is not that the effects of these chemicals are small or irrelevant, but rather that they affect subjective experience only via their direct or indirect influence on computational activities. For example, if there can be no difference in subjective experience without there also being a difference in synaptic discharges, then the requisite detail of simulation is at the synaptic level (or higher).

III. THE TECHNOLOGICAL LIMITS OF COMPUTATION

At our current stage of technological development, we have neither sufficiently powerful hardware nor the requisite software to create conscious minds in computers. But persuasive arguments have been given to the effect that if technological progress continues unabated then these shortcomings will eventually be overcome. Some authors argue that this stage may be only a few decades away.[1] Yet present purposes require no assumptions about the time-scale. The simulation argument works equally well for those who think that it will take hundreds of thousands of years to reach a “posthuman” stage of civilization, where humankind has acquired most of the technological capabilities that one can currently show to be consistent with physical laws and with material and energy constraints.

Such a mature stage of technological development will make it possible to convert planets and other astronomical resources into enormously powerful computers. It is currently hard to be confident in any upper bound on the computing power that may be available to posthuman civilizations. As we are still lacking a “theory of everything”, we cannot rule out the possibility that novel physical phenomena, not allowed for in current physical theories, may be utilized to transcend those constraints[2] that in our current understanding impose theoretical limits on the information processing attainable in a given lump of matter. We can with much greater confidence establish lower bounds on posthuman computation, by assuming only mechanisms that are already understood. For example, Eric Drexler has outlined a design for a system the size of a sugar cube (excluding cooling and power supply) that would perform $10^{21}$ instructions per second.[3] Another author gives a rough estimate of $10^{42}$ operations per second for a computer with a mass on the order of a large planet.[4] (If we could create quantum computers, or learn to build computers out of nuclear matter or plasma, we could push closer to the theoretical limits. Seth Lloyd calculates an upper bound for a 1 kg computer of $5 \times 10^{50}$ logical operations per second carried out on ~$10^{31}$ bits.[5] However, it suffices for our purposes to use the more conservative estimate that presupposes only currently known design-principles.)

The amount of computing power needed to emulate a human mind can likewise be roughly estimated. One estimate, based on how computationally expensive it is to replicate the functionality of a piece of nervous tissue that we have already understood and whose functionality has been replicated in silico, contrast enhancement in the retina, yields a figure of ~$10^{14}$ operations per second for the entire human brain.[6] An alternative estimate, based on the number of synapses in the brain and their firing frequency, gives a figure of ~$10^{16}$-$10^{17}$ operations per second.[7] Conceivably, even more could be required if we want to simulate in detail the internal workings of synapses and dendritic trees. However, it is likely that the human central nervous system has a high degree of redundancy on the microscale to compensate for the unreliability and noisiness of its neuronal components. One would therefore expect a substantial efficiency gain when using more reliable and versatile non-biological processors.

Memory seems to be a no more stringent constraint than processing power.[8] Moreover, since the maximum human sensory bandwidth is ~$10^{8}$ bits per second, simulating all sensory events incurs a negligible cost compared to simulating the cortical activity. We can therefore use the processing power required to simulate the central nervous system as an estimate of the total computational cost of simulating a human mind.

[1] See e.g. K. E. Drexler, Engines of Creation: The Coming Era of Nanotechnology, London, Fourth Estate, 1985; N. Bostrom, “How Long Before Superintelligence?” International Journal of Futures Studies, vol. 2, (1998); R. Kurzweil, The Age of Spiritual Machines: When computers exceed human intelligence, New York, Viking Press, 1999; H. Moravec, Robot: Mere Machine to Transcendent Mind, Oxford University Press, 1999.

[2] Such as the Bremermann-Bekenstein bound and the black hole limit (H. J. Bremermann, “Minimum energy requirements of information transfer and computing.” International Journal of Theoretical Physics 21: 203-217 (1982); J. D. Bekenstein, “Entropy content and information flow in systems with limited energy.” Physical Review D 30: 1669-1679 (1984); A. Sandberg, “The Physics of Information Processing Superobjects: The Daily Life among the Jupiter Brains.” Journal of Evolution and Technology, vol. 5 (1999)).

[3] K. E. Drexler, Nanosystems: Molecular Machinery, Manufacturing, and Computation, New York, John Wiley & Sons, Inc., 1992.

[4] R. J. Bradbury, “Matrioshka Brains.” Working manuscript (2002), http://www.aeiveos.com/~bradbury/MatrioshkaBrains/MatrioshkaBrains.html.

[5] S. Lloyd, “Ultimate physical limits to computation.” Nature 406 (31 August): 1047-1054 (2000).

[6] H. Moravec, Mind Children, Harvard University Press (1989).

[7] Bostrom (1998), op. cit.

[8] See references in foregoing footnotes.

If the environment is included in the simulation, this will require additional computing power – how much depends on the scope and granularity of the simulation. Simulating the entire universe down to the quantum level is obviously infeasible, unless radically new physics is discovered. But in order to get a realistic simulation of human experience, much less is needed – only whatever is required to ensure that the simulated humans, interacting in normal human ways with their simulated environment, don’t notice any irregularities. The microscopic structure of the inside of the Earth can be safely omitted. Distant astronomical objects can have highly compressed representations: verisimilitude need extend only to the narrow band of properties that we can observe from our planet or solar system spacecraft. On the surface of Earth, macroscopic objects in inhabited areas may need to be continuously simulated, but microscopic phenomena could likely be filled in ad hoc. What you see through an electron microscope needs to look unsuspicious, but you usually have no way of confirming its coherence with unobserved parts of the microscopic world. Exceptions arise when we deliberately design systems to harness unobserved microscopic phenomena that operate in accordance with known principles to get results that we are able to independently verify. The paradigmatic case of this is a computer. The simulation may therefore need to include a continuous representation of computers down to the level of individual logic elements. This presents no problem, since our current computing power is negligible by posthuman standards.

Moreover, a posthuman simulator would have enough computing power to keep track of the detailed belief-states in all human brains at all times. Therefore, when it saw that a human was about to make an observation of the microscopic world, it could fill in sufficient detail in the simulation in the appropriate domain on an as-needed basis. Should any error occur, the director could easily edit the states of any brains that have become aware of an anomaly before it spoils the simulation. Alternatively, the director could skip back a few seconds and rerun the simulation in a way that avoids the problem.

It thus seems plausible that the main computational cost in creating simulations that are indistinguishable from physical reality for human minds in the simulation resides in simulating organic brains down to the neuronal or sub-neuronal level.[9] While it is not possible to get a very exact estimate of the cost of a realistic simulation of human history, we can use ~$10^{33}$-$10^{36}$ operations as a rough estimate.[10] As we gain more experience with virtual reality, we will get a better grasp of the computational requirements for making such worlds appear realistic to their visitors. But in any case, even if our estimate is off by several orders of magnitude, this does not matter much for our argument. We noted that a rough approximation of the computational power of a planetary-mass computer is $10^{42}$ operations per second, and that assumes only already known nanotechnological designs, which are probably far from optimal. A single such computer could simulate the entire mental history of humankind (call this an *ancestor-simulation*) by using less than one millionth of its processing power for one second. A posthuman civilization may eventually build an astronomical number of such computers. We can conclude that the computing power available to a posthuman civilization is sufficient to run a huge number of ancestor-simulations even if it allocates only a minute fraction of its resources to that purpose. We can draw this conclusion even while leaving a substantial margin of error in all our estimates.

[9] As we build more and faster computers, the cost of simulating our machines might eventually come to dominate the cost of simulating nervous systems.

Posthuman civilizations would have enough computing power to run hugely many ancestor-simulations even while using only a tiny fraction of their resources for that purpose.
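The order-of-magnitude arithmetic behind this conclusion can be sketched in a few lines of Python. The inputs are the figures quoted in this section and in the paper's footnote on the cost of an ancestor-simulation; the function and constant names are illustrative assumptions, not the author's. Multiplying the footnote's factors directly gives values somewhat above the rounded $10^{33}$ to $10^{36}$ range, which is consistent with the paper's remark that an error of several orders of magnitude does not matter for the argument.

```python
# Figures quoted in the paper; all names here are illustrative assumptions.
HUMANS_EVER_LIVED = 100e9            # 100 billion humans
YEARS_PER_HUMAN = 50                 # years of life per human used in the footnote
SECONDS_PER_YEAR = 30e6              # roughly 30 million seconds per year
BRAIN_OPS_PER_SECOND = (1e14, 1e17)  # low and high per-brain estimates
PLANETARY_OPS_PER_SECOND = 1e42      # planetary-mass computer estimate
PAPER_COST_RANGE = (1e33, 1e36)      # rounded range the paper works with


def ancestor_simulation_ops(brain_ops_per_second):
    """Total operations to simulate every human brain that has ever lived."""
    brain_seconds = HUMANS_EVER_LIVED * YEARS_PER_HUMAN * SECONDS_PER_YEAR
    return brain_seconds * brain_ops_per_second


def seconds_on_planetary_computer(total_ops):
    """Time one planetary-mass computer would need for the given workload."""
    return total_ops / PLANETARY_OPS_PER_SECOND


# Direct product of the footnote's factors, for the low and high brain estimates.
direct_range = tuple(ancestor_simulation_ops(r) for r in BRAIN_OPS_PER_SECOND)
# Run time on one planetary computer for the paper's rounded cost range.
paper_seconds = tuple(seconds_on_planetary_computer(c) for c in PAPER_COST_RANGE)

if __name__ == "__main__":
    print("direct product of the footnote's factors:", direct_range)
    print("seconds needed for the paper's rounded range:", paper_seconds)
```

At the upper end of the paper's rounded range, the run time is about one millionth of a second of the planetary computer's full capacity, which is the scale the text refers to.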

IV. THE CORE OF THE SIMULATION ARGUMENT

The basic idea of this paper can be expressed roughly as follows: If there were a substantial chance that our civilization will ever get to the posthuman stage and run many ancestor-simulations, then how come you are not living in such a simulation?

We shall develop this idea into a rigorous argument. Let us introduce the following notation:

$f_P$: Fraction of all human-level technological civilizations that survive to reach a posthuman stage

$\bar{N}$: Average number of ancestor-simulations run by a posthuman civilization

$\bar{H}$: Average number of individuals that have lived in a civilization before it reaches a posthuman stage

[10] 100 billion humans $\times$ 50 years/human $\times$ 30 million secs/year $\times$ [$10^{14}$, $10^{17}$] operations in each human brain per second $\approx$ [$10^{33}$, $10^{36}$] operations.


The actual fraction of all observers with human-type experiences that live in simulations is then

$$f_{sim} = \frac{f_P \bar{N} \bar{H}}{(f_P \bar{N} \bar{H}) + \bar{H}}$$

Writing $f_I$ for the fraction of posthuman civilizations that are interested in running ancestor-simulations (or that contain at least some individuals who are interested in that and have sufficient resources to run a significant number of such simulations), and $\bar{N}_I$ for the average number of ancestor-simulations run by such interested civilizations, we have

$$\bar{N} = f_I \bar{N}_I$$

and thus:

$$f_{sim} = \frac{f_P f_I \bar{N}_I}{(f_P f_I \bar{N}_I) + 1} \qquad (*)$$
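The step from the first expression for $f_{sim}$ to (*) can be spelled out as follows. This is only a restatement of the two moves the text describes (cancelling $\bar{H}$, then substituting $\bar{N} = f_I \bar{N}_I$), and it assumes $\bar{H} > 0$:

$$
\begin{aligned}
f_{sim} &= \frac{f_P \bar{N} \bar{H}}{f_P \bar{N} \bar{H} + \bar{H}}
         = \frac{f_P \bar{N}}{f_P \bar{N} + 1} \\
        &= \frac{f_P f_I \bar{N}_I}{f_P f_I \bar{N}_I + 1}
\end{aligned}
$$

The average population $\bar{H}$ drops out, so the fraction depends only on the product $f_P f_I \bar{N}_I$.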

Because of the immense computing power of posthuman civilizations, $\bar{N}_I$ is extremely large, as we saw in the previous section. By inspecting (*) we can then see that at least one of the following three propositions must be true:

(1) $f_P \approx 0$

(2) $f_I \approx 0$

(3) $f_{sim} \approx 1$
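A small numerical sketch may help show why inspecting (*) yields this three-way disjunction. The function below evaluates $f_{sim} = f_P f_I \bar{N}_I / (f_P f_I \bar{N}_I + 1)$. The function name, the scenario labels and the specific input values are hypothetical choices for illustration, not figures from the paper.

```python
def fraction_simulated(f_p, f_i, n_i):
    """Evaluate equation (*): share of human-type observers living in simulations.

    f_p: fraction of human-level civilizations that reach a posthuman stage
    f_i: fraction of posthuman civilizations interested in ancestor-simulations
    n_i: average number of ancestor-simulations run by an interested civilization
    """
    simulated_per_original = f_p * f_i * n_i
    return simulated_per_original / (simulated_per_original + 1.0)


# Hypothetical inputs, chosen only to illustrate the three branches.
scenarios = {
    "(1) almost no civilization survives": fraction_simulated(1e-15, 0.5, 1e9),
    "(2) almost no civilization is interested": fraction_simulated(0.5, 1e-15, 1e9),
    "(3) neither fraction is negligible": fraction_simulated(0.01, 0.01, 1e9),
}

if __name__ == "__main__":
    for label, value in scenarios.items():
        print(f"{label}: f_sim = {value:.8f}")
```

With a very large $\bar{N}_I$, the result stays away from 1 only when $f_P$ or $f_I$ is small enough to offset it, which is the content of propositions (1) and (2).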

V. A BLAND INDIFFERENCE PRINCIPLE

We can take a further step and conclude that conditional on the truth of (3), one’s credence in the hypothesis that one is in a simulation should be close to unity. More generally, if we knew that a fraction $x$ of all observers with human-type experiences live in simulations, and we don’t have any information that indicates that our own particular experiences are any more or less likely than other human-type experiences to have been implemented in vivo rather than in machina, then our credence that we are in a simulation should equal $x$:

$$Cr(SIM \mid f_{sim} = x) = x \qquad (\#)$$

This step is sanctioned by a very weak indifference principle. Let us distinguish two cases. The first case, which is the easiest, is where all the minds in question are like your own in the sense that they are exactly qualitatively identical to yours: they have exactly the same information and the same experiences that you have. The second case is where the minds are “like” each other only in the loose sense of being the sort of minds that are typical of human creatures, but they are qualitatively distinct from one another and each has a distinct set of experiences. I maintain that even in the latter case, where the minds are qualitatively different, the simulation argument still works, provided that you have no information that bears on the question of which of the various minds are simulated and which are implemented biologically.

A detailed defense of a stronger principle, which implies the above stance for both cases as trivial special instances, has been given in the literature.[11] Space does not permit a recapitulation of that defense here, but we can bring out one of the underlying intuitions by bringing to our attention an analogous situation of a more familiar kind. Suppose that x% of the population has a certain genetic sequence S within the part of their DNA commonly designated as “junk DNA”. Suppose, further, that there are no manifestations of S (short of what would turn up in a gene assay) and that there are no known correlations between having S and any observable characteristic. Then, quite clearly, unless you have had your DNA sequenced, it is rational to assign a credence of x% to the hypothesis that you have S. And this is so quite irrespective of the fact that the people who have S have qualitatively different minds and experiences from the people who don’t have S. (They are different simply because all humans have different experiences from one another, not because of any known link between S and what kind of experiences one has.)

The same reasoning holds if S is not the property of having a certain genetic sequence but instead the property of being in a simulation, assuming only that we have no information that enables us to predict any differences between the experiences of simulated minds and those of the original biological minds.

It should be stressed that the bland indifference principle expressed by (#) prescribes indifference only between hypotheses about which observer you are, when you have no information about which of these observers you are. It does not in general prescribe indifference between hypotheses when you lack specific information about which of the hypotheses is true. In contrast to Laplacean and other more ambitious principles of indifference, it is therefore immune to Bertrand’s paradox and similar predicaments that tend to plague indifference principles of unrestricted scope.

[11] In e.g. N. Bostrom, “The Doomsday argument, Adam & Eve, UN++, and Quantum Joe.” Synthese 127(3): 359-387 (2001); and most fully in my book Anthropic Bias: Observation Selection Effects in Science and Philosophy, Routledge, New York, 2002.

Readers familiar with the Doomsday argument[12] may worry that the bland principle of indifference invoked here is the same assumption that is responsible for getting the Doomsday argument off the ground, and that the counterintuitiveness of some of the implications of the latter incriminates or casts doubt on the validity of the former. This is not so. The Doomsday argument rests on a much stronger and more controversial premiss, namely that one should reason as if one were a random sample from the set of all people who will ever have lived (past, present, and future) even though we know that we are living in the early twenty-first century rather than at some point in the distant past or the future. The bland indifference principle, by contrast, applies only to cases where we have no information about which group of people we belong to.

If betting odds provide some guidance to rational belief, it may also be worth pondering that if everybody were to place a bet on whether they are in a simulation or not, then if people use the bland principle of indifference, and consequently place their money on being in a simulation if they know that that’s where almost all people are, then almost everyone will win their bets. If they bet on not being in a simulation, then almost everyone will lose. It seems better that the bland indifference principle be heeded.
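The betting observation can be made concrete with a short sketch. It assumes a population in which a known fraction of people are simulated and everyone places the same bet; the function name, the population size and the 99.9% figure are hypothetical values chosen for illustration.

```python
def betting_outcome(population, simulated_fraction):
    """Count winners under the two uniform betting policies.

    If everyone bets "I am in a simulation", the winners are exactly the
    simulated people; if everyone bets the opposite, the winners are exactly
    the non-simulated people.
    """
    simulated = round(population * simulated_fraction)
    original = population - simulated
    return {
        "winners_if_all_bet_simulated": simulated,
        "winners_if_all_bet_not_simulated": original,
    }


# Hypothetical example: one million people, 99.9% of them simulated.
example = betting_outcome(1_000_000, 0.999)

if __name__ == "__main__":
    print(example)
```

The share of winners under the first policy equals the simulated fraction itself, which is why following the bland indifference principle maximizes the number of people who win.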

Further, one can consider a sequence of possible situations in which an increasing fraction of all people live in simulations: 98%, 99%, 99.9%, 99.9999%, and so on. As one approaches the limiting case in which everybody is in a simulation (from which one can deductively infer that one is in a simulation oneself), it is plausible to require that the credence one assigns to being in a simulation gradually approach the limiting case of complete certainty in a matching manner.

VI. INTERPRETATION

The possibility represented by proposition (1) is fairly straightforward. If (1) is true, then humankind will almost certainly fail to reach a posthuman level; for virtually no species at our level of development become posthuman, and it is hard to see any justification for thinking that our own species will be especially privileged or protected from future disasters. Conditional on (1), therefore, we must give a high credence to DOOM, the hypothesis that humankind will go extinct before reaching a posthuman level:

$$Cr(DOOM \mid f_P \approx 0) \approx 1$$

[12] See e.g. J. Leslie, “Is the End of the World Nigh?” Philosophical Quarterly 40, 158: 65-72 (1990).

One can imagine hypothetical situations where we have such evidence as would trump knowledge of $f_P$. For example, if we discovered that we were about to be hit by a giant meteor, this might suggest that we had been exceptionally unlucky. We could then assign a credence to DOOM larger than our expectation of the fraction of human-level civilizations that fail to reach posthumanity. In the actual case, however, we seem to lack evidence for thinking that we are special in this regard, for better or worse.

Proposition (1) doesn’t by itself imply that we are likely to go extinct soon, only that we are unlikely to reach a posthuman stage. This possibility is compatible with us remaining at, or somewhat above, our current level of technological development for a long time before going extinct. Another way for (1) to be true is if it is likely that technological civilization will collapse. Primitive human societies might then remain on Earth indefinitely.

There are many ways in which humanity could become extinct before reaching posthumanity. Perhaps the most natural interpretation of (1) is that we are likely to go extinct as a result of the development of some powerful but dangerous technology.[13] One candidate is molecular nanotechnology, which in its mature stage would enable the construction of self-replicating nanobots capable of feeding on dirt and organic matter – a kind of mechanical bacteria. Such nanobots, designed for malicious ends, could cause the extinction of all life on our planet.[14]

The second alternative in the simulation argument’s conclusion is that the fraction of posthuman civilizations that are interested in running ancestor-simulations is negligibly small. In order for (2) to be true, there must be a strong convergence among the courses of advanced civilizations. If the number of ancestor-simulations created by the interested civilizations is extremely large, the rarity of such civilizations must be correspondingly extreme. Virtually no posthuman civilizations decide to use their resources to run large numbers of ancestor-simulations. Furthermore, virtually all posthuman civilizations lack individuals who have sufficient resources and interest to run ancestor-simulations; or else they have reliably enforced laws that prevent such individuals from acting on their desires.

[13] See my paper “Existential Risks: Analyzing Human Extinction Scenarios and Related Hazards.” Journal of Evolution and Technology, vol. 9 (2001) for a survey and analysis of the present and anticipated future threats to human survival.

[14] See e.g. Drexler (1985) op cit., and R. A. Freitas Jr., “Some Limits to Global Ecophagy by Biovorous Nanoreplicators, with Public Policy Recommendations.” Zyvex preprint April (2000), http://www.foresight.org/NanoRev/Ecophagy.html.

What force could bring about such convergence? One can speculate that advanced civilizations all develop along a trajectory that leads to the recognition of an ethical prohibition against running ancestor-simulations because of the suffering that is inflicted on the inhabitants of the simulation. However, from our present point of view, it is not clear that creating a human race is immoral. On the contrary, we tend to view the existence of our race as constituting a great ethical value. Moreover, convergence on an ethical view of the immorality of running ancestor-simulations is not enough: it must be combined with convergence on a civilization-wide social structure that enables activities considered immoral to be effectively banned.

Another possible convergence point is that almost all individual posthumans in virtually all posthuman civilizations develop in a direction where they lose their desires to run ancestor-simulations. This would require significant changes to the motivations driving their human predecessors, for there are certainly many humans who would like to run ancestor-simulations if they could afford to do so. But perhaps many of our human desires will be regarded as silly by anyone who becomes a posthuman. Maybe the scientific value of ancestor-simulations to a posthuman civilization is negligible (which is not too implausible given its unfathomable intellectual superiority), and maybe posthumans regard recreational activities as merely a very inefficient way of getting pleasure – which can be obtained much more cheaply by direct stimulation of the brain’s reward centers. One conclusion that follows from (2) is that posthuman societies will be very different from human societies: they will not contain relatively wealthy independent agents who have the full gamut of human-like desires and are free to act on them.

The possibility expressed by alternative (3) is the conceptually most intriguing one. If we are living in a simulation, then the cosmos that we are observing is just a tiny piece of the totality of physical existence. The physics in the universe where the computer is situated that is running the simulation may or may not resemble the physics of the world that we observe. While the world we see is in some sense “real”, it is not located at the fundamental level of reality.

It may be possible for simulated civilizations to become posthuman. They may then run their own ancestor-simulations on powerful computers they build in their simulated universe. Such computers would be “virtual machines”, a familiar concept in computer science. (Javascript web-applets, for instance, run on a virtual machine – a simulated computer – inside your desktop.) Virtual machines can be stacked: it’s possible to simulate a machine simulating another machine, and so on, in arbitrarily many steps of iteration. If we do go on to create our own ancestor-simulations, this would be strong evidence against (1) and (2), and we would therefore have to conclude that we live in a simulation. Moreover, we would have to suspect that the posthumans running our simulation are themselves simulated beings; and their creators, in turn, may also be simulated beings.

Reality may thus contain many levels. Even if it is necessary for the hierarchy to bottom out at some stage – the metaphysical status of this claim is somewhat obscure – there may be room for a large number of levels of reality, and the number could be increasing over time. (One consideration that counts against the multi-level hypothesis is that the computational cost for the basement-level simulators would be very great. Simulating even a single posthuman civilization might be prohibitively expensive. If so, then we should expect our simulation to be terminated when we are about to become posthuman.)

Although all the elements of such a system can be naturalistic, even physical, it is possible to draw some loose analogies with religious conceptions of the world. In some ways, the posthumans running a simulation are like gods in relation to the people inhabiting the simulation: the posthumans created the world we see; they are of superior intelligence; they are “omnipotent” in the sense that they can interfere in the workings of our world even in ways that violate its physical laws; and they are “omniscient” in the sense that they can monitor everything that happens. However, all the demigods except those at the fundamental level of reality are subject to sanctions by the more powerful gods living at lower levels.

Further rumination on these themes could climax in a naturalistic theogony that would study the structure of this hierarchy, and the constraints imposed on its inhabitants by the possibility that their actions on their own level may affect the treatment they receive from dwellers of deeper levels. For example, if nobody can be sure that they are at the basement-level, then everybody would have to consider the possibility that their actions will be rewarded or punished, based perhaps on moral criteria, by their simulators. An afterlife would be a real possibility. Because of this fundamental uncertainty, even the basement civilization may have a reason to behave ethically. The fact that it has such a reason for moral behavior would of course add to everybody else’s reason for behaving morally, and so on, in a truly virtuous circle. One might get a kind of universal ethical imperative, which it would be in everybody’s self-interest to obey, as it were “from nowhere”.

In

additionto a ncestor‐simulations,one may alsoconsiderthepossibility

of more selective simulations that include only a small group of humans or a

13

single individual. The rest of humanity would then be zombies or “shadow‐

people” – humans simulated only at a level sufficient for the fully simulated

people not to notice anything suspicious. It is not clear how much cheaper

shadow‐people would be to simulate than real people. It is not even obvious that

it is possible for an entity to behave indistinguishably from a real human and yet

lack conscious experience. Even if there are such selective simulations, you

should not think that you are in one of them unless you think they are much

more numerous than complete simulations. There

would have to be about 100

billion times as many “me‐simulations” (simulations of the life of only a single

mind) as there are ancestor‐simulations in order for most simulated persons to be

in me‐simulations.
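The figure of about 100 billion follows from simple counting. The sketch below assumes (the passage does not spell this out) that one ancestor‐simulation contains roughly 100 billion simulated people while one me‐simulation contains exactly one; the names and the population figure are illustrative.

```python
# Illustrative assumptions: one ancestor-simulation holds ~100 billion
# simulated people, while one me-simulation holds exactly one.
PEOPLE_PER_ANCESTOR_SIM = 100e9
PEOPLE_PER_ME_SIM = 1


def fraction_in_me_sims(me_sims_per_ancestor_sim: float) -> float:
    """Fraction of all simulated persons who live in me-simulations."""
    in_me_sims = me_sims_per_ancestor_sim * PEOPLE_PER_ME_SIM
    in_ancestor_sims = PEOPLE_PER_ANCESTOR_SIM
    return in_me_sims / (in_me_sims + in_ancestor_sims)


for ratio in (1e6, 1e9, 1e11, 1e13):
    share = fraction_in_me_sims(ratio)
    print(f"{ratio:.0e} me-sims per ancestor-sim -> {share:.4f} of simulated persons")
```

Under these assumptions the share only passes one half once me‐simulations outnumber ancestor‐simulations by about 100 billion to one.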

There is also the possibility of simulators abridging certain parts of the

mental lives

of simulated beings and giving them false memories of the sort of

experiences that they would typically have had during the omitted interval. If so,

one can consider the following (farfetched) solution to the problem of evil: that

there is no suffering in the world and all memories of suffering are illusions. Of

course, this hypothesis can be seriously entertained only at those times when you

are not currently suffering.

Supposing we live in a simulation, what are the implications for us

humans? The foregoing remarks notwithstanding, the implications are not all

that radical. Our best guide to how our

posthuman creators have chosen to set

up our world is the standard empirical study of the universe we see. The

revisions to most parts of our belief networks would be rather slight and subtle –

in proportion to our lack of confidence in our ability to understand the ways of

posthumans.

Properly understood, therefore, the truth of (3) should have no

tendency to make us “go crazy” or to prevent us from going about our business

and making plans and predictions for tomorrow. The chief empirical importance

of (3) at the current time seems to lie in its role in the

tripartite conclusion

established above.[15]

We may hope that (3) is true since that would decrease the

probability of (1), although if computational constraints make it likely that

simulators would terminate a simulation before it reaches a posthuman level,

then our best hope would be that (2) is true.

If we learn more about posthuman

motivations and resource constraints,

maybe as a result of developing towards becoming posthumans ourselves, then

the hypothesis that we are simulated will come to have a much richer set of

empirical implications.

[15] For some reflections by another author on the consequences of (3), which were sparked by a

privately circulated earlier version of this paper, see R. Hanson, “How to Live in a Simulation.”

Journal of Evolution and Technology, vol. 7 (2001).

VII. CONCLUSION

A technologically mature “posthuman” civilization would have enormous

computing power. Based on this empirical fact, the simulation argument shows

that at least one of the following propositions is true: (1) The fraction of

human‐level civilizations that reach a posthuman stage is very close to zero; (2) The

fraction of posthuman civilizations that are interested in running

ancestor‐simulations is very close to zero; (3) The fraction of all people with our kind of

experiences that are living in a simulation is very close to one.

If (1) is true, then we will almost certainly go extinct before

reaching

posthumanity. If (2) is true, then there must be a strong convergence among the

courses of advanced civilizations so that virtually none contains any relatively

wealthy individuals who desire to run ancestor‐simulations and are free to do so.

If (3) is true, then we almost certainly live in a

simulation. In the dark forest of

our current ignorance, it seems sensible to apportion one’s credence roughly

evenly between (1), (2), and (3).

Unless we are now living in a simulation, our descendants will almost

certainly never run an ancestor‐simulation.

Acknowledgements

I’m grateful to many people for comments, and

especially to Amara Angelica,

Robert Bradbury, Milan Cirkovic, Robin Hanson, Hal Finney, Robert A. Freitas

Jr., John Leslie, Mitch Porter, Keith DeRose, Mike Treder, Mark Walker, Eliezer

Yudkowsky, and several anonymous referees.

www.nickbostrom.com www.simulation‐argument.com

In this paper, Bostrom describes his **"Simulation Argument"**. The argument does not directly claim that we live in a simulation. Instead, it leads to a trilemma: at least one of the following three unlikely-seeming propositions must be true.

1. "The fraction of human-level civilizations that reach a posthuman stage is very close to zero"

2. "The fraction of posthuman civilizations that are interested in running ancestor-simulations is very close to zero"

3. "The fraction of all people with our kind of experiences that are living in a simulation is very close to one"

Read on to learn more about Bostrom's argument.

To dive deeper into this topic: [The Simulation Argument](http://www.simulation-argument.com/)

There is a lot of debate about whether we will be able to simulate consciousness in machines. The central problem lies in the fact that we have no real understanding of how the brain gives rise to the mind, of how neurons and action potentials create consciousness.

Henry Markram is a neuroscientist who heads the [Blue Brain Project](https://en.wikipedia.org/wiki/Blue_Brain_Project), a project that attempts to create a synthetic brain by reverse-engineering mammalian brain circuitry. The project successfully recreated a single neocortical column of a two-week-old rat, which contains about 10,000 neurons (the human brain contains about 100 billion). **Markram believes that it will be possible to build a model of the human brain within 10 years.**

You can dig deeper into this topic with these reads:

- [Big Think: Can Computers be Conscious?](http://bigthink.com/going-mental/can-computers-be-conscious)

- [Popular Science: Is A Simulated Brain Conscious?](http://www.popsci.com/article/science/simulated-brain-conscious)

- [What It Will Take for Computers to Be Conscious](https://www.technologyreview.com/s/531146/what-it-will-take-for-computers-to-be-conscious/)

Humans experience the world through their senses. What we call "reality" is what we interact with via our senses and our beliefs. There could be a base reality to which we have no direct access that turns out to be a different "reality" than the one we have access to.

We could go extinct while trying to reach the posthuman stage. Here is a video where Nick Bostrom goes into more detail about this possibility: [](https://www.youtube.com/watch?v=5LStef_kV6Q "nbi2")

Either running ancestor simulations is banned by future civilizations, or posthumans simply lose interest in creating them.

As you go deeper and consider multi-level simulations (simulations within simulations), the cost of simulating human history becomes marginal compared with the cost of simulating machines that are themselves capable of running simulations.

The author invokes new physical phenomena yet to be discovered and states that we can only put lower bounds on the posthuman computational power. He believes that the computational power required for the simulation hypothesis is within our reach.

We can easily compute this number of operations.

Let's assume that:

- H = 107 billion humans have ever lived

- L = 50 years as the average human lifetime

- T = 31,557,600 seconds in a year

- O = a range of [$10^{14}$, $10^{17}$] operations in each human brain per second

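$$ H \times L \times T \times O \approx [1.7 \times 10^{34}, 1.7 \times 10^{37}] \,\,\mathrm{operations} $$

The paper quotes a rougher figure of about $10^{33}$ to $10^{36}$ operations, roughly an order of magnitude lower.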
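A quick way to check the order of magnitude is to multiply the factors directly. The sketch below uses the values listed above; the variable names are illustrative. With these inputs the product comes out near $1.7 \times 10^{34}$ to $1.7 \times 10^{37}$ operations, so figures such as $10^{33}$ to $10^{36}$ should be read as loose order-of-magnitude estimates.

```python
# Inputs as listed above; names are illustrative.
HUMANS_EVER_LIVED = 107e9             # H
AVERAGE_LIFETIME_YEARS = 50           # L
SECONDS_PER_YEAR = 31_557_600         # T (365.25 days)
OPS_PER_BRAIN_SECOND = (1e14, 1e17)   # O, low and high estimates

# Total seconds of brain activity across all of human history.
brain_seconds = HUMANS_EVER_LIVED * AVERAGE_LIFETIME_YEARS * SECONDS_PER_YEAR

# Scale by the low and high per-brain operation rates.
total_ops_low, total_ops_high = (brain_seconds * ops for ops in OPS_PER_BRAIN_SECOND)

print(f"brain-seconds in human history: {brain_seconds:.2e}")
print(f"total operations: {total_ops_low:.1e} to {total_ops_high:.1e}")
```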

**Substrate-Independence:** Substrate-independence is the idea that the mind is a dynamic process that is not tied to a specific set of atoms. The functions of the mind need a platform, a substrate, on which to carry out their processing. If these functions can be implemented on different substrates, we can speak of a substrate-independent mind. This property would allow the mind to exist in other forms, and it could in principle be transferred to a computer network. A good analogy is a program written in platform-independent code: it does not depend on the architecture it runs on and can easily be moved to another.

The roughly $10^{11}$ neurons in a human brain operate by sending electrical pulses to one another via approximately $10^{15}$ synapses. Assuming 10 impulses per second, this suggests that an adult human brain carries out about $10^{16}$ logical operations per second.

You can read more about this topic here: [Energy Limits to the Computational Power of the Human Brain.](http://www.merkle.com/brainLimits.html)
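The estimate above is a single multiplication. The sketch below spells it out and also derives the implied number of synapses per neuron; the figures are the ones quoted above, and the variable names are illustrative.

```python
NEURONS = 1e11             # approximate neuron count quoted above
SYNAPSES = 1e15            # approximate synapse count quoted above
IMPULSES_PER_SECOND = 10   # assumed average rate quoted above

# One operation per synapse per impulse.
ops_per_second = SYNAPSES * IMPULSES_PER_SECOND
synapses_per_neuron = SYNAPSES / NEURONS

print(f"operations per second: {ops_per_second:.0e}")
print(f"synapses per neuron:   {synapses_per_neuron:.0e}")
```

The result, about $10^{16}$ operations per second, falls inside the $[10^{14}, 10^{17}]$ range used for the whole-history estimate.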

**(1)** $f_p \approx 0 \Rightarrow $ *"The fraction of human-level civilizations that reach a posthuman stage is very close to zero"*

**(2)** $f_I \approx 0 \Rightarrow $ *"The fraction of posthuman civilizations that are interested in running ancestor-simulations is very close to zero"*

**(3)** $f_{sim} \approx 1 \Rightarrow $ *"The fraction of all people with our kind of experiences that are living in a simulation is very close to one"*
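In the paper, these three propositions come from a single expression for the fraction of observers who are simulated, $f_{sim} = \frac{f_p f_I N_I}{f_p f_I N_I + 1}$, where $N_I$ is the average number of ancestor-simulations run by an interested posthuman civilization. Here is a minimal sketch of how that expression behaves. The sample value of $N_I$ and the sample fractions are illustrative assumptions, not figures from the paper.

```python
def fraction_simulated(f_p: float, f_i: float, n_i: float) -> float:
    """f_sim = f_p * f_i * n_i / (f_p * f_i * n_i + 1)."""
    simulated_per_real = f_p * f_i * n_i
    return simulated_per_real / (simulated_per_real + 1)


N_I = 1e6  # hypothetical: simulations run per interested civilization

# (label, f_p, f_i): all sample values are made up for illustration.
cases = [
    ("(1) f_p close to zero", 1e-9, 0.5),
    ("(2) f_I close to zero", 0.5, 1e-9),
    ("neither close to zero", 0.1, 0.1),
]

for label, f_p, f_i in cases:
    print(f"{label}: f_sim = {fraction_simulated(f_p, f_i, N_I):.4f}")
```

Unless $f_p$ or $f_I$ is small enough to offset a very large $N_I$, the fraction is pushed toward one, which is why (3) is the remaining alternative when (1) and (2) are both false.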

Couldn't you consider the other limiting case, with a decreasing fraction of people living in simulations? Can someone explain why the upper bound is more likely than the lower bound? I feel like the hypothesis hinges on this assumption.

This subject gained attention recently due to an interview with Elon Musk at the Code Conference. Someone asked him whether he believed we were living in a simulation, and he replied that there is only a one in billions chance that we are living in base reality. You can watch that part of the interview here:

[](https://www.youtube.com/watch?list=PLKof9YSAshgyPqlK-UUYrHfIQaOzFPSL4&v=2KK_kzrJPS8 "Elon Musk interview")

Here is an interview with Nick Bostrom at the Future of Humanity Institute, Oxford University:

[](https://www.youtube.com/watch?v=nnl6nY8YKHs "nick bostrom interview")

Is this the “quite widely accepted position in the philosophy of mind”? I'm probably missing some subtlety in the argument, because to me this sounds like “machines can think because machines can think.” My position is that thinking is a physical process and as such can be carried out by a sufficiently fine-grained simulation. I was expecting an argument for or against the claim that thinking is a physical process.

In the late 1980s Thomas K. Landauer performed a study in order to determine the functional capacity of human memory. In the study people were asked to read text, look at pictures, and hear words, short passages of music, sentences, and nonsense syllables. After delays ranging from minutes to several days the subjects were tested to determine how much they had retained. The remarkable result of Landauer's study was that human beings remembered **two bits per second**. Extrapolating this value over a lifetime (say 80 years), this rate of memorization would produce:

$$3600\,\times\,24\,\times\,365.25\,\times\,80\,\times\,2 = 5,049,216,000$$

which is somewhat over **$10^9$ bits for the entire lifetime of a human being**.

You can read the study here: [Thomas K. Landauer: How Much Do People Remember? Some Estimates of the Quantity of Learned Information in Long-term Memory](http://www.cs.colorado.edu/~mozer/Teaching/syllabi/7782/readings/Landauer1986.pdf)
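The extrapolation above can be reproduced in a few lines. This is a sketch with illustrative variable names. Like the formula above, it assumes the two-bits-per-second rate applies around the clock for 80 years; the conversion to megabytes is added here only for a sense of scale.

```python
BITS_PER_SECOND = 2                    # Landauer's retention estimate
SECONDS_PER_YEAR = 3600 * 24 * 365.25
LIFETIME_YEARS = 80                    # lifetime assumed in the text

lifetime_bits = BITS_PER_SECOND * SECONDS_PER_YEAR * LIFETIME_YEARS
lifetime_megabytes = lifetime_bits / 8 / 1e6  # 8 bits per byte, 10^6 bytes per MB

print(f"{lifetime_bits:,.0f} bits (about {lifetime_megabytes:.0f} MB)")
```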

**The Brain in a Vat** thought experiment is most commonly used to illustrate global or Cartesian skepticism. Imagine that you are a brain hooked up to a sophisticated computer program that can perfectly simulate experiences of the outside world. If you cannot be sure that you are not a brain in a vat, then you cannot rule out the possibility that all of your beliefs about the external world are false. We can construct the following skeptical argument. Let “B” stand for any belief or claim about the external world, for example, that the grass is green.

1. If I know that B, then I know that I am not a brain in a vat.

2. I do not know that I am not a brain in a vat.

3. Thus, I do not know that B.

You can read more about it here: [The Brain in a Vat Argument](http://www.iep.utm.edu/brainvat/)

Ray Kurzweil predicts that by 2029 a personal computer will be 1,000 times more powerful than the human brain.

This chart shows the evolution of computational power over time and Kurzweil's predictions.

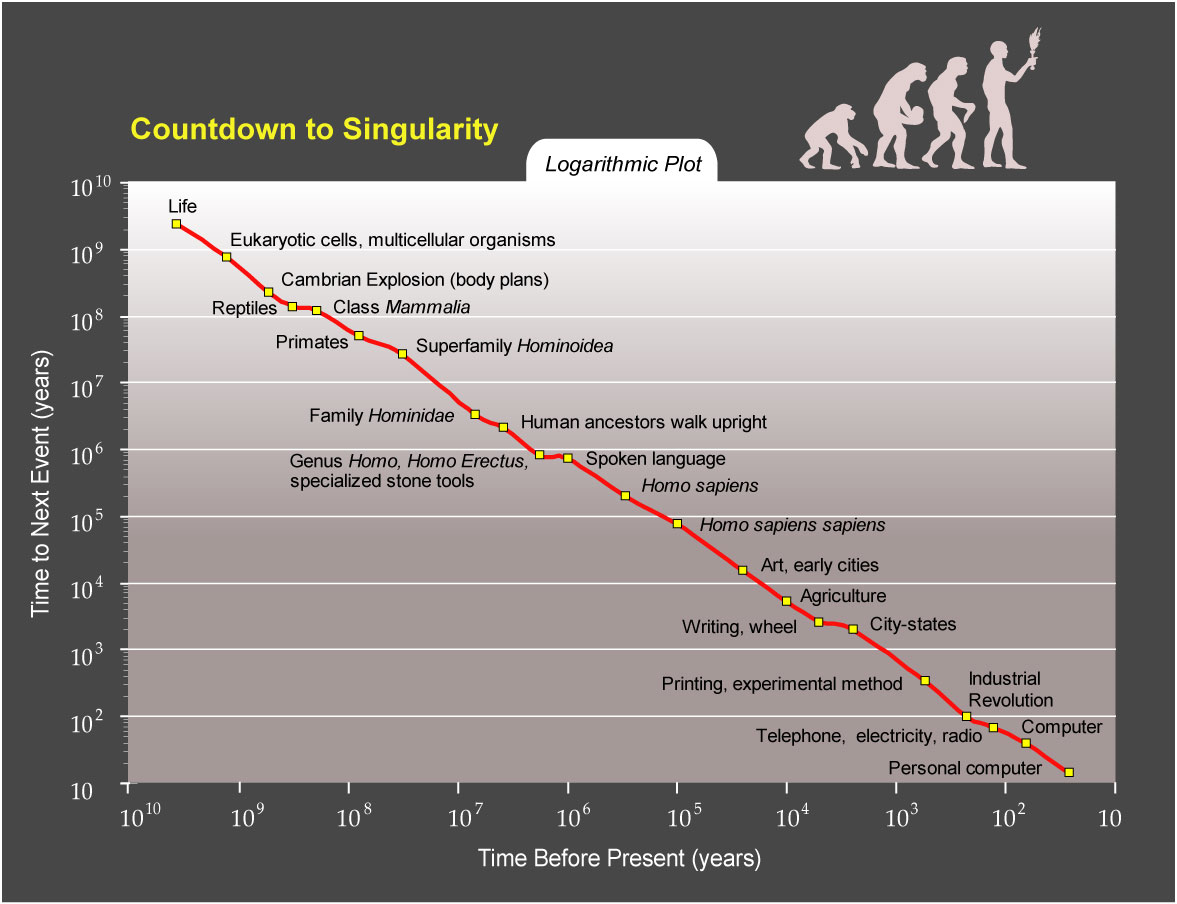

Kurzweil argues that time periods between events in human history shrink exponentially. He believes that we are very close to having the computational power required to simulate a human brain.

The following chart by Kurzweil depicts his law of accelerating returns.

The simulation argument is time-independent. It does not matter whether it takes 100 or 100,000 years; all that matters is that we reach the posthuman stage before going extinct.

This is an interesting argument against the multi-level hypothesis. This would mean that if we are in a simulation then we ourselves will never achieve the posthuman level and will never be able to create ancestor-simulations.

The author makes a good analogy with religion. **The creators of ancestor-simulations can be seen as gods.** They are omnipotent and decide when and how to create and stop the simulations. Assuming we live in a multi-level simulation, only the creators in base reality are truly omnipotent and omniscient, with control over all the simulations.

**The simulation hypothesis cannot be proven or disproven**. It also cannot matter: it makes no practical difference to us. If it's true, it's not that trees and clouds and other people don't exist; it's just that they have a different ultimate nature than we thought.

If we believe that we are in a simulation and that our simulators are in a simulation too, then we live in a multi-level simulation. We can safely assume that we are on our way to creating our own ancestor-simulations.

Nick Bostrom is a Swedish philosopher at the University of Oxford. He is known for his work on existential risk, the anthropic principle, human enhancement ethics, superintelligence risks, the reversal test, and consequentialism. He has authored more than 200 publications, including the New York Times bestseller *Superintelligence: Paths, Dangers, Strategies*.

You can learn more about Nick on his personal home page: [nickbostrom.com](http://www.nickbostrom.com)

*Thinking is a function of man's immortal soul [they say.] God has given an immortal soul to every man and woman, but not to any other animal or to machines. Hence no animal or machine can think. I am unable to accept any part of this … It appears to me that the argument quoted above implies a serious restriction of the omnipotence of the Almighty. It is admitted that there are certain things that He cannot do such as making one equal to two, but should we not believe that He has freedom to confer a soul on an elephant if He sees fit? ... An argument of exactly similar form may be made for the case of machines.*

**- Alan Turing**

References to simulated worlds in pop culture date as far back as the 1950s (*[Time Out of Joint](https://en.wikipedia.org/wiki/Time_Out_of_Joint)*). Our civilization seems inclined to embed itself in virtual, simulated worlds, and this argument is gaining weight now that we are at the dawn of virtual and augmented reality. Video games keep becoming more photorealistic, and we feel a need to immerse ourselves in virtual worlds that depict stories of our past and present and try to imagine our future.

With the upcoming virtual reality revolution, we should start seeing more and more experiences similar to Second Life, which show a real desire among humans to embed themselves in simulated realities.

The author develops a probabilistic treatment of the argument in order to assess how likely it is that we live in a simulation.